Jaeyoung Lee | Curriculum Vitae

Research Fellow (2024.01~Present), Centre for AI Fundamentals (Computer Science), University of Manchester, Manchester, UK.

Research Associate (2021.02~2022.03) and Postdoctoral Fellow (2018.01~2021.01), Waterloo Intelligent Software Engineering (WISE) Lab (Electrical and Computer Engineering), University of Waterloo, Waterloo, ON, Canada.

Postdoctoral Fellow (2015.09~2017.12), Reinforcement Learning and Artificial Intelligence (RLAI) Lab (Computing Science), University of Alberta, Edmonton, AB, Canada.

Education

Ph.D. in Electrical & Electronics Engineering, Yonsei University, Seoul, South Korea, 2015. Area of specialization: Reinforcement Learning and Optimal Control. [ Ph.D. dissertation ]

B.E. in Information and Control Engineering, Kwangwoon University, Seoul, South Korea, 2006. Major: Information & Control Engineering; Minor: Electronic Engineering.

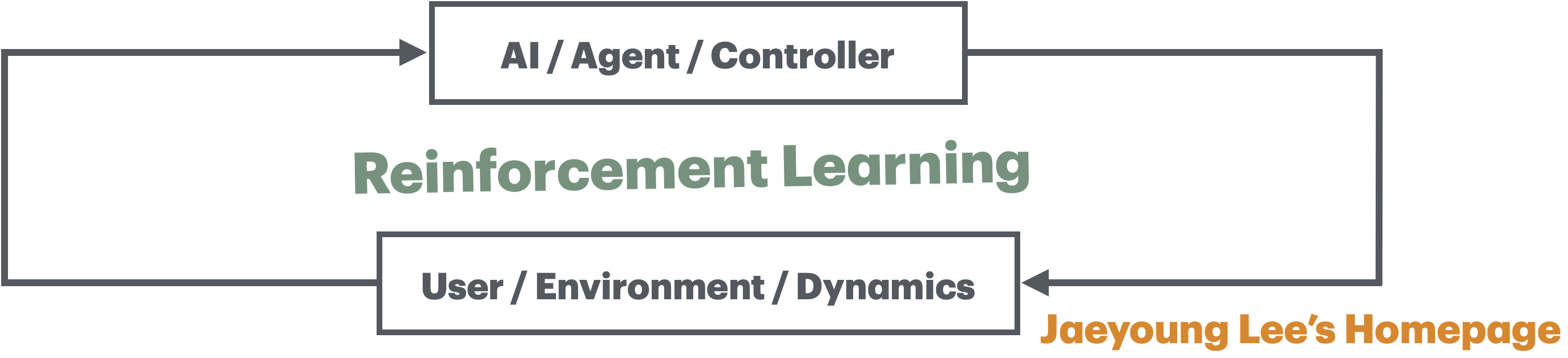

Area of Specialization: Reinforcement Learning

Current Research Interests: User Modelling and AI Assistants
1) Inverse (constrained) reinforcement learning with computational rationality
2) Model-based Bayesian reinforcement learning
3) Exploration-exploitation trade-off based on experimental design

Other Research Interests in Decision-Making and Optimal Control
1) Safe/deep reinforcement and imitation learning, e.g., for autonomous driving
2) Reinforcement learning and optimal control in continuous domains
3) Control, game, and multi-agent theory, e.g., for autonomous driving

Selected Publications

Topic 1. Constrained Reinforcement Learning for Safety-critical Systems

Lee, J.*, Sedwards, S.* and Czarnecki, K. (2023). Uniformly Constrained Reinforcement Learning. [ Springer ] Autonomous Agents and Multi-Agent Systems, 38(1), 51 pages.

Lee, J.*, Sedwards, S.* and Czarnecki, K. (2021). Recursive Constraints to Prevent Instability in Constrained Reinforcement Learning. [ arXiv | video | slides ] In: Proc. 1st Multi-Objective Decision Making Workshop (MODeM 2021). Online at http://modem2021.cs.nuigalway.ie. (cited: 1)

Topic 2. Distillation and Imitation of Deep Q-Networks by Decision Trees for Formal Verification

Abdelzad, V.*, Lee, J.*, Sedwards, S.*, Soltani, S.* and Czarnecki, K. (2021). Non-divergent Imitation for Verification of Complex Learned Controllers. [ IEEE Xplore | video | slides ] In: 2021 International Joint Conference on Neural Networks (IJCNN). Shenzhen, China (virtual). (cited: 1)

Jhunjhunwala, A., Lee, J., Sedwards, S., Abdelzad, V. and Czarnecki, K. (2020). Improved Policy Extraction via Online Q-value Distillation. [ IEEE Xplore ] In: 2020 International Joint Conference on Neural Networks (IJCNN, part of 2020 IEEE WCCI). Glasgow, UK. (cited: 2)

Topic 3. Deep Reinforcement Learning (for Autonomous Driving)

Lee, J.*, Balakrishnan, A.*, Gaurav, A.*, Czarnecki, K. and Sedwards, S.* (2019). WiseMove: a Framework to Investigate Safe Deep Reinforcement Learning for Autonomous Driving. In: Parker, D., Wolf, V. (eds) Quantitative Evaluation of Systems. QEST 2019. Lecture Notes in Computer Science, vol. 11785.

Balakrishnan, A., Lee, J., Gaurav, A., Czarnecki, K. and Sedwards, S. (2021). Transfer Reinforcement Learning for Autonomous Driving: from WiseMove to WiseSim. [ ACM | Git ] ACM Transactions on Modeling and Computer Simulation (TOMACS), 31(3), article no. 15, 26 pages. (cited: 1)

Lee, S., Lee, J. and Hasuo, I. (2020 & 2021). Predictive PER: Balancing Priority and Diversity towards Stable Deep Reinforcement Learning. In: (1) 2021 International Joint Conference on Neural Networks (IJCNN). Shenzhen, China (virtual). (cited: 1)

Topic 4. Reinforcement Learning and Optimal Control in Continuous Domain

Lee, J. and Sutton, R.S. (2021). Policy Iterations for Reinforcement Learning Problems in Continuous Time and Space: Fundamental Theory and Methods. [ Elsevier | arXiv | Git ] Automatica, 126, 109421, 15 pages. (cited: 38)

Lee, J. and Sutton, R.S. (2017). Policy Iteration for Discounted Reinforcement Learning Problems in Continuous Time and Space. In: 2017 Multi-disciplinary Conference on Reinforcement Learning and Decision Making (RLDM). Ann Arbor, MI, USA. (cited: 1)

Lee, J.Y., Park, J.B. and Choi, Y.H. (2012). Integral Q-learning and Explorized Policy Iteration for Adaptive Optimal Control of Continuous-time Linear Systems. [ Elsevier | preprint | Git ] Automatica, 48(11), pp. 2850~2859. (cited: 177)

Lee, J.Y., Park, J.B. and Choi, Y.H. (2014). Integral Reinforcement Learning for a Class of Nonlinear Systems with Invariant Explorations. IEEE Transactions on Neural Networks and Learning Systems, 26(5), pp. 916~932. (cited: 109)

Lee, J.Y., Park, J.B. and Choi, Y.H. (2014). On Integral Generalized Policy Iteration for Continuous-time Linear Quadratic Regulations. Automatica, 50(2), pp. 475~489. (cited: 35)

Topic 5. Multi-agent Consensus Techniques, with Applications to Vehicle Formation Control

Lee, J.Y., Choi, Y.H. and Park, J.B. (2014). Inverse Optimal Design of the Distributed Consensus Protocol for Formation Control of Multiple Mobile Robots. In: Proc. 53rd IEEE Conference on Decision and Control (CDC), pp. 2222~2227. Los Angeles, CA, USA. (cited: 4)

Lee, G.U., Lee, J.Y., Park, J.B. and Choi, Y.H. (2018). On Stability and Inverse Optimality for a Class of Multi-agent Linear Consensus Protocols. [ Springer ] International Journal of Control, Automation and Systems (IJCAS), 16(3), pp. 1194~1206. (cited: 6)

Other Materials: Study Notes and Writings on Measure Theory and Integration

Study materials on Folland, G.B. (2013). Real Analysis: Modern Techniques and Their Applications. John Wiley & Sons, and more.

Lee, J. (2022). Real Analysis, Probability, and Random Processes with Measure Theory (in progress). [ PDF ]


Jaeyoung Lee, Christabel Pankhurst Building, Dover St, Manchester M13 9PS, United Kingdom. Mobile: +44 7918 718261.